Introduction

The emergence of powerful language models like OpenAI's GPT series has greatly increased the potential for practical applications, such as virtual assistants, chatbots, content creation, and more. However, one issue that these models often encounter is a phenomenon called "hallucination". This term refers to when the AI generates output that seems plausible, but in fact is incorrect or unrelated to the input provided. This article will discuss best practices for preventing hallucinations in GPT models.

Here's What I Learned So Far

It is usually more beneficial to openly admit ignorance by stating "I don't know" than to confidently provide an incorrect answer.

Even though our model tries to have an answer for everything, sometimes it gets it wrong. So, here's how we dodge those awkward moments.

Alright, let's cut to the chase.

BTW, the prompts used here as examples are real ones, so don't hesitate to use them in your projects.

Request Evidence

The first practice is to stick to facts and evidence. Ask the model to refer to a specific source and stay within it.

Find the relevant information, then answer the question based on relevant information.

Let's say we use a retrieval technique with ChatGPT-Retrieval-Plugin, aka search via embeddings/vectors. If the search returns 0 documents, the model has to come up with an answer from its own training data, which is where made-up answers tend to creep in.
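The zero-documents case can also be guarded on the caller's side, before the model is asked anything. Here is a minimal Python sketch of that idea. The names `answer_from_documents`, `FALLBACK`, and `fake_model` are hypothetical and not part of ChatGPT-Retrieval-Plugin, and the retrieved documents are assumed to arrive as plain strings.

```python
from typing import Callable, List

FALLBACK = "I don't know"  # assumed wording for the fallback reply


def answer_from_documents(
    question: str,
    documents: List[str],
    ask_model: Callable[[str], str],
) -> str:
    """Answer only from retrieved documents; skip the model when there are none."""
    docs = [d.strip() for d in documents if d.strip()]
    if not docs:
        # Retrieval found nothing usable: do not let the model guess.
        return FALLBACK
    sources = "\n".join(f"[{i}] {d}" for i, d in enumerate(docs, start=1))
    prompt = (
        "Find the relevant information, then answer the question based on "
        "relevant information.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )
    return ask_model(prompt)


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    return "stub answer"


print(answer_from_documents("Who wrote the note?", [], fake_model))
print(answer_from_documents("Who wrote the note?", ["Ann wrote the note."], fake_model))
```

The guard only covers the case where retrieval returns nothing at all. When documents come back but do not contain the answer, the model still needs an instruction in the prompt itself.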

So we may update the prompt with something like:

In case you do not have enough information say "I don't know"

Set Boundaries

Another great rule of thumb is to set clear boundaries for the model. In a nutshell, a hallucination is a drift toward some other topic.

Answer solely based on information you found and extracted from the provided content

Do's and don'ts work pretty well.

You can add three do's and three don'ts, much like the handful of examples you would give in few-shot prompting.
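One way to keep do's and don'ts manageable is to hold them as lists and render them into the system prompt. The sketch below is only an illustration: `build_rules_prompt` is a hypothetical helper, and the rules in it are example wording, not a tested set.

```python
from typing import List


def build_rules_prompt(dos: List[str], donts: List[str]) -> str:
    """Render do's and don'ts as two numbered lists for a system prompt."""
    lines = ["Follow these rules.", "Do:"]
    lines += [f"{i}. {rule}" for i, rule in enumerate(dos, start=1)]
    lines.append("Don't:")
    lines += [f"{i}. {rule}" for i, rule in enumerate(donts, start=1)]
    return "\n".join(lines)


# Illustrative rules only; adapt them to your own use case.
dos = [
    "Answer solely based on information found in the provided content.",
    "Quote the passage your answer relies on.",
    'Say "I don\'t know" when the content does not cover the question.',
]
donts = [
    "Answer from memory when no source is provided.",
    "Switch to a topic the user did not ask about.",
    "Guess names, dates, or numbers.",
]

print(build_rules_prompt(dos, donts))
```

Keeping the rules in data rather than in one long string makes it easier to add, remove, or test a single rule at a time.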

Use the language that the user previously used or the language requested by the user

or

Respond to the user's request, which may include asking questions or requesting specific actions (such as translation, rewriting, etc.), based on the provided content.

and even:

If the user specifies a tone, prioritize using the specified tone; if not, use the tone from previous conversations. If neither is available, use a humorous and concise tone. In all cases, ensure the output is easy to read.

Another useful, non-obvious tip to make the model more effective is to structure the prompt as JSON:

"rules": ["If the user does not make a request, perform the following tasks: 1. Display the title in the user's language; 2. Summarize the article content into a brief and easily understandable paragraph; 3. Depending on the content, present three thought-provoking questions or insights with appropriate subheadings. For articles, follow this approach; for code, formulas, or content not suited for questioning, this step may be skipped."]

Step By Step Reasoning

Self-Consistency - Reflection

In short, prompt the model to "think". In other words, give the model room to reach a conclusion through its own reasoning, and provide more examples.

Let’s think step by step about how to solve this question.

Here is the provided information that might help you: <INFORMATION>

Say “I don’t know” if you are unable to answer based on the information provided.

Let's say you're asking the model to calculate a nutrition plan, like "Given my body parameters, if I want to drop X lb, what will my nutrition plan look like?"

A self-consistent prompt might look like this:

Let's think this through. I have a BMI of 27, weigh 190 lb, and am 5'10" tall, male. You are a nutrition coach. What will my nutrition plan look like for a week if I want to drop 30 lb in the next 3 months?

This prompt encourages the model to reason through the problem step by step, using the information provided, and come up with a consistent answer based on its own understanding. Then you generate three different completions and pass them into a final prompt so the LLM can choose a path on its own.

The concept involves generating multiple, varied reasoning paths using few-shot Chain of Thought (CoT) and then selecting the most consistent answer from these generations. This method enhances the effectiveness of CoT prompting, particularly in tasks that require arithmetic and commonsense reasoning.
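The selection step can be sketched as a simple majority vote over sampled completions. This is a minimal illustration, not a reference implementation: `self_consistent_answer`, `extract_final_answer`, and `fake_sample` are hypothetical names, and the `Answer:` marker is an assumed output format that the prompt would have to request.

```python
import itertools
from collections import Counter
from typing import Callable, Tuple


def extract_final_answer(completion: str) -> str:
    """Return the text after the last 'Answer:' marker (assumed format)."""
    _, found, tail = completion.rpartition("Answer:")
    return tail.strip() if found else completion.strip()


def self_consistent_answer(
    sample: Callable[[str], str], prompt: str, n_samples: int = 5
) -> Tuple[str, float]:
    """Sample several reasoning paths and return the majority answer and its vote share."""
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    answers = [extract_final_answer(sample(prompt)) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_samples


# Canned completions stand in for real LLM samples so the sketch runs offline.
_canned = itertools.cycle([
    "Start at 190 lb and subtract 30 lb. Answer: 160 lb",
    "The goal is 190 minus 30. Answer: 160 lb",
    "Roughly 15% of body weight is lost. Answer: 165 lb",
])


def fake_sample(prompt: str) -> str:
    return next(_canned)


answer, share = self_consistent_answer(
    fake_sample, "What is my target weight?", n_samples=3
)
print(answer, round(share, 2))
```

In real use, `sample` would call the model with a temperature above 0, since identical samples give the vote nothing to choose between. A low vote share is itself a useful signal that the answer should not be trusted.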

Describe the question in detail before answering

ReAct Framework (ha-ha front-end community, we have one too)

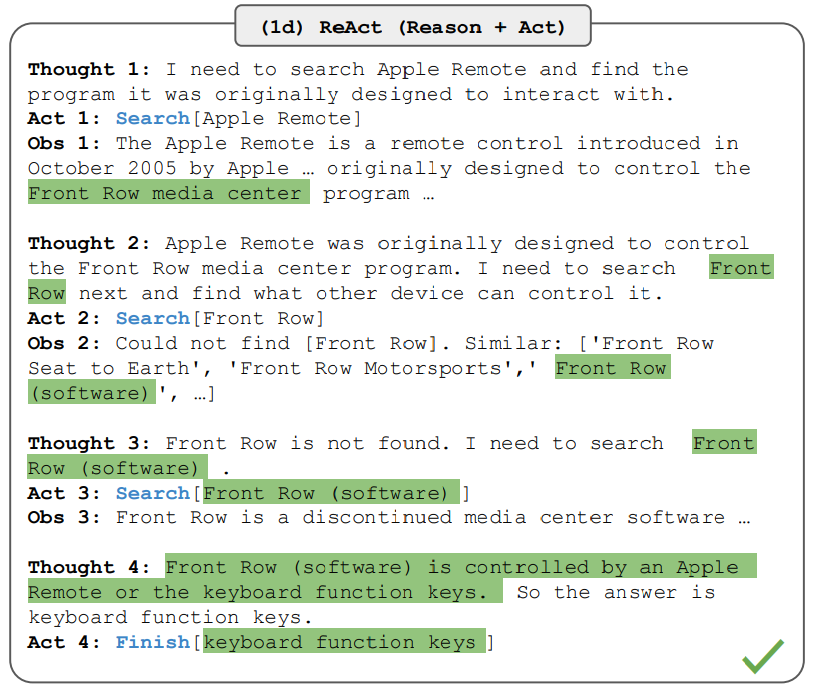

There's a relatively new approach to solving reasoning issues called ReAct.

The ReAct framework allows LLMs to interact with external tools to retrieve additional information, leading to more reliable and factual responses.

One example of a ReAct-style agent is Auto-GPT. When you assign a high-level task to the model, it breaks the task down into smaller ones and performs each as a separate call to the available tools, such as a vector database or certain APIs.
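The loop behind this pattern can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how Auto-GPT is implemented: `react_loop`, `scripted_llm`, and the `search` tool are hypothetical, and the `Action: tool[input]` / `Final Answer:` text format is an assumed convention that a real prompt would have to enforce.

```python
import re
from typing import Callable, Dict

# Assumed step format: "Action: tool_name[tool input]"
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def react_loop(
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    question: str,
    max_steps: int = 5,
) -> str:
    """Alternate model steps and tool calls until a final answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += "\n" + step
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            observation = "No valid action found."
        else:
            name, arg = match.groups()
            tool = tools.get(name)
            observation = tool(arg) if tool else f"Unknown tool: {name}"
        # Feed the tool result back so the next step can use it.
        transcript += f"\nObservation: {observation}"
    return "I don't know"  # step budget exhausted without an answer


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real model: search first, then answer."""
    if "Observation:" not in transcript:
        return "Thought: I need to look this up.\nAction: search[capital of France]"
    return "Thought: The observation answers the question.\nFinal Answer: Paris"


tools = {"search": lambda query: "Paris is the capital of France."}
print(react_loop(scripted_llm, tools, "What is the capital of France?"))
```

Capping the number of steps and falling back to "I don't know" keeps the loop from running forever when the tools return nothing useful.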

ReAct has trade-offs, though. Its structural constraint reduces its flexibility in formulating reasoning steps.

ReAct also depends heavily on the information it retrieves; non-informative search results derail the model's reasoning and make it hard to recover and reformulate thoughts.

We will probably see a similar approach in GPT-4 + Plugins quite soon.

Reduce Temperature for Less Random Results

Temperature is an interesting parameter here: raising it increases the "creativity" and "randomness" of the model, allowing it to generate more diverse responses, while lowering it makes the output more predictable.

The temperature setting determines the balance between creativity and conservatism in the model's responses. Choosing a value that matches the task can improve the final result, and for factual answers that usually means a lower value.

For critical tasks like classification, we could set the temperature to 0. For content generation on a specific pre-defined topic, it could be set to 0.3.

For more creative brainstorming sessions, the temperature could be set to 1.
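To see why lower values give less random results, it helps to look at how temperature rescales token scores before sampling. The sketch below uses made-up logits for three candidate tokens. `softmax_with_temperature` and `SUGGESTED_TEMPERATURE` are hypothetical names, and treating temperature 0 as a pure greedy pick is an assumption about the limiting case, not a statement about any particular API.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw token scores into probabilities, sharpened or flattened by temperature."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if temperature == 0:
        # Limiting case: all probability goes to the highest-scoring token.
        best = max(range(len(logits)), key=logits.__getitem__)
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Values taken from the guidance above; the task names are illustrative.
SUGGESTED_TEMPERATURE = {
    "classification": 0.0,
    "on_topic_content": 0.3,
    "brainstorming": 1.0,
}

logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for task, temperature in SUGGESTED_TEMPERATURE.items():
    probs = softmax_with_temperature(logits, temperature)
    print(task, temperature, [round(p, 3) for p in probs])
```

As the temperature drops, probability mass concentrates on the top-scoring token, so repeated calls are more likely to agree with each other.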

Summary

In this article we reviewed hallucination prevention strategies. You can also use the prompts shown here in your own projects.

In conclusion, the best practices for LLMs involve admitting ignorance when unsure, sticking to facts and evidence, setting clear boundaries, and using structured prompts. Techniques such as vector database retrieval can be used to keep the model from providing incorrect answers. The model should be instructed to say "I don't know" when it lacks sufficient information. It's also crucial to set boundaries to prevent the model from deviating from the topic. Structured prompts in JSON format can make the model more effective. Chain-of-Thought reasoning and the ReAct framework are other effective strategies for improving the model's reasoning capabilities. The model's "temperature" setting can be adjusted to balance creativity and conservatism in its responses.

These practices collectively contribute to a more reliable and factual model output.

Special appreciation goes to my old friend Nikita Galkin for encouraging me to stay humble and release smaller notes, as I intended to.

Done is better than perfect, indeed.

References

Automatically Discovered Chain of Thought: https://arxiv.org/pdf/2305.02897.pdf

Karpathy Tweet: https://twitter.com/karpathy/status/1529288843207184384

Best prompt: Theory of Mind: https://arxiv.org/ftp/arxiv/papers/23...

Reflexion paper: https://arxiv.org/abs/2303.11366

CoT Guide: https://www.promptingguide.ai/techniques/cot

ReAct guide: https://www.promptingguide.ai/techniques/react